The value of think-aloud interviews to improve the assessment of scientific skills in C*Sci programs

Authors and affiliations

Amy Grack Nelson, Science Museum of Minnesota

Evelyn Christian Ronning, Science Museum of Minnesota

Introduction

Getting people to participate in science, particularly through C*Sci¹ projects, is an instrumental way to increase their interest in scientific discovery. As the public is invited to participate in scientific research, understanding participants' existing skills is key to providing the training and resources that help them develop those skills further.

The project Streamlining Embedded Assessment to Understand Citizen Scientists' Skill Gains (DRL #1713424) created shared embedded assessment tools that C*Sci projects can use. The project focused on two scientific inquiry skill areas frequently used by C*Sci volunteers: “record standard observations” and “notice relevant features.” Its embedded assessments provided an authentic way for volunteers to demonstrate these skills by completing performance-based tasks that could easily be embedded into existing C*Sci program activities (Becker-Klein et al., 2016). For the purpose of the assessments, standard observations were defined as “those that provide a consistent or uniform set of measures to describe a phenomenon or event, using a standard unit that can be duplicated or shared by all observers” (Becker-Klein et al., 2021). Standard observations include temporal, environmental, spatial, and biological observations. “Notice relevant features” was defined as the ability of volunteers to notice features of an organism, match those features with existing knowledge about that organism, and then use that knowledge to identify the organism using a classification system (Becker-Klein et al., 2021). See the project website for more information about the assessments and the project.

This blog post focuses on an often overlooked aspect of assessment design: validation. We share how we used think-aloud interviews to gather validity evidence for both a video-based and a photo-based embedded assessment, and what we learned from this process. Although this project focused on assessments for C*Sci programs, our process and learnings can be useful for developing performance-based embedded assessments in any type of informal science education program.

Ensuring the quality of embedded assessments

How do we ensure that performance-based embedded assessments for C*Sci and other kinds of informal science education programs are measuring what we intend them to measure (validity) and doing so reliably? There are various ways to gather validity evidence for embedded assessments and ensure reliability. This project used expert reviews and think-aloud, or cognitive, interviews (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2014).

For the expert review, four reviewers (with expertise in measurement, evaluation, scientific inquiry, and C*Sci) provided feedback on how well the individual tasks on each of the two assessments aligned with the project's definition of the skill area being measured. Where needed, they also suggested how a task might be revised to better align with the skill. For each assessment, we conducted think-aloud interviews with 10 volunteers from the C*Sci program in which that assessment was embedded. We asked volunteers to talk out loud while completing the assessment tasks so we could hear their thinking process (Beatty & Willis, 2007). This approach helped surface any instructions or individual tasks that were confusing or misinterpreted. We also observed volunteers' behaviors as they completed the assessment to gain additional insights. These insights allowed us to improve the embedded assessments before field testing.

Think-aloud process for a video-based assessment

The embedded assessment developed to measure the skill “record standard observations” was a video-based assessment embedded into volunteer training for the Michigan Butterfly Network. The five-minute video was meant to replicate the experience of someone walking slowly through a prairie, observing butterflies and other insects as they would when collecting data for the project (view a short clip of the assessment). Volunteers were instructed to record the butterflies they saw in the video using the same protocol they would use in the field. The assessment was later scored using a rubric.

During the think-aloud, each volunteer narrated their process of observing and recording what they saw in the video using the Michigan Butterfly Network's data collection protocol. Volunteers described why they decided to record (or not record) an insect or butterfly they observed, what they were writing on their data sheet, and why they identified each butterfly at the level they did (to species, to family, or as unidentifiable). While the volunteer completed the assessment, we also noted their behaviors, such as looking in a field guide or squinting to see something on the computer screen.

Think-aloud process for a photo-based assessment

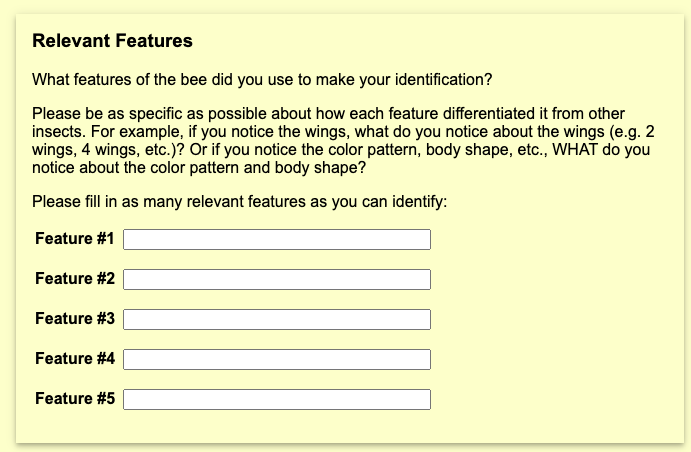

The embedded assessment developed to measure the skill “notice relevant features” was a photo-based assessment embedded into the data submission form for the C*Sci program Beespotter. Beespotter volunteers photograph bees they encounter and submit the photos along with their identification of the bee pictured. The assessment portion of the submission form asks volunteers to identify up to five key features that supported their identification (see Image 1).

Image 1: Screenshot of Beespotter online embedded assessment

We asked volunteers to think aloud as they submitted 2–3 spottings (photographs) to the Beespotter portal. The purpose of the think-aloud interviews was to understand volunteers’ reasons for submitting particular images, their thought processes in determining what they considered relevant features for bee identification, and the resources they used to help them make their identifications. We also observed how the volunteer interacted with the online form.

What we learned from think-alouds

Think-alouds can bring to light areas where an embedded assessment may lack authenticity or present a more difficult situation than volunteers typically experience in a C*Sci program, which can affect how accurately the assessment measures a volunteer's skills. The Michigan Butterfly Network's video-based assessment was meant to be embedded within their volunteer training and to mimic the experience of collecting data in the field. However, the think-alouds uncovered some differences between the video and real-life field experiences. We learned that in the field, volunteers often photographed the butterflies they encountered, whereas in the assessment they were not allowed to pause the video. In the field, volunteers therefore had more time to identify a butterfly using a field guide and could zoom into their photo to examine features and make a more accurate identification. This difference meant that volunteers had fewer resources available to identify butterflies during the assessment, which may have resulted in more incorrect identifications. In addition, all of the volunteers noted that the person in the video walked faster than the pace they understood the project's field protocol to call for, making it difficult to focus on some of the insects on the screen.

Both of these examples illustrate how the video-based assessment felt less authentic and ended up measuring the skill of recording standard observations at a more difficult level than the program required of volunteers. In fact, none of the volunteers got a perfect score, even though some had high levels of expertise. We know that video assessments (as well as other image- and audio-based assessments) can never fully recreate a real-world experience, because they are only representations. Still, the think-alouds pointed to multiple areas where the assessment could be improved so that the skill level it requires better approximates the skill level required in the field.

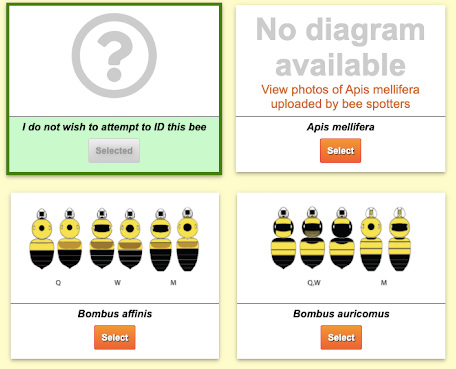

Think-alouds not only help improve assessment design; they can also bring to light issues with assessment scoring. We saw this during the Beespotter think-alouds, which revealed a frequent disconnect between what participants considered relevant features for identifying the bee in their photograph and what features were deemed relevant for scoring purposes. The assessment was scored based on relevant features at the genus level. However, for the most part, volunteers were listing relevant features at the species level. As they explained what features they used for identification, they often compared the bee in their photograph to the drawings of different bee species on the submission form (see Image 2). Many of the features that participants listed indicated a higher-level and more nuanced understanding of bee identification. Yet these participants would not have scored well on the assessment, since they listed relevant features for bee species rather than genus. Without the think-alouds, we would not have known why volunteers scored poorly on the assessment and would have made invalid inferences about the skill level of Beespotter volunteers.

Image 2: Screenshot of Beespotter species identification assessment

The value of think-aloud interviews

Think-alouds are a valuable step in developing and validating not only performance-based embedded assessments but any type of measure used to evaluate an informal science education program. As our project illustrates, you do not need to interview a large number of people (we interviewed 10 volunteers per assessment) to gain insights that improve a measure, the way it is scored, and how its results are interpreted.

Think-alouds can also provide valuable insights into the program itself. Both embedded assessments in our project were based on actual data collection and submission protocols used by the C*Sci programs. Hearing volunteers think aloud as they worked through the assessments revealed how the protocols were being interpreted and followed, giving program staff useful information on how to improve the design of their protocols and the training around them.

----

¹ In this post, we use the more inclusive term C*Sci in place of “citizen science,” as adopted by the Citizen Science Association at its 2022 conference.

Acknowledgments

We'd like to acknowledge the Streamlining Embedded Assessment project team that developed the embedded assessments in collaboration with a group of 10 C*Sci projects from across the country. This team includes Cathlyn Davis, Rachel Becker-Klein, Tina Phillips, Veronica DelBianco, Karen Peterman, Jenna Linhart, and Andrea Grover.

References

American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (2014). Standards for educational and psychological testing. Washington, DC: American Educational Research Association.

Beatty, P. C., & Willis, G. B. (2007). Research synthesis: The practice of cognitive interviewing. Public Opinion Quarterly, 71(2), 287–311.

Becker-Klein, R., Davis, C., Phillips, T., DelBianco, V., Grack Nelson, A., & Christian Ronning, E. (2021). Using a shared embedded assessment tool to understand participant skills: Processes and lessons learned. Manuscript submitted for publication.

Becker-Klein, R., Peterman, K., & Stylinski, C. (2016). Embedded assessment as an essential method for understanding public engagement in citizen science. Citizen Science: Theory and Practice, 1(1).